Sibling Inference

Info

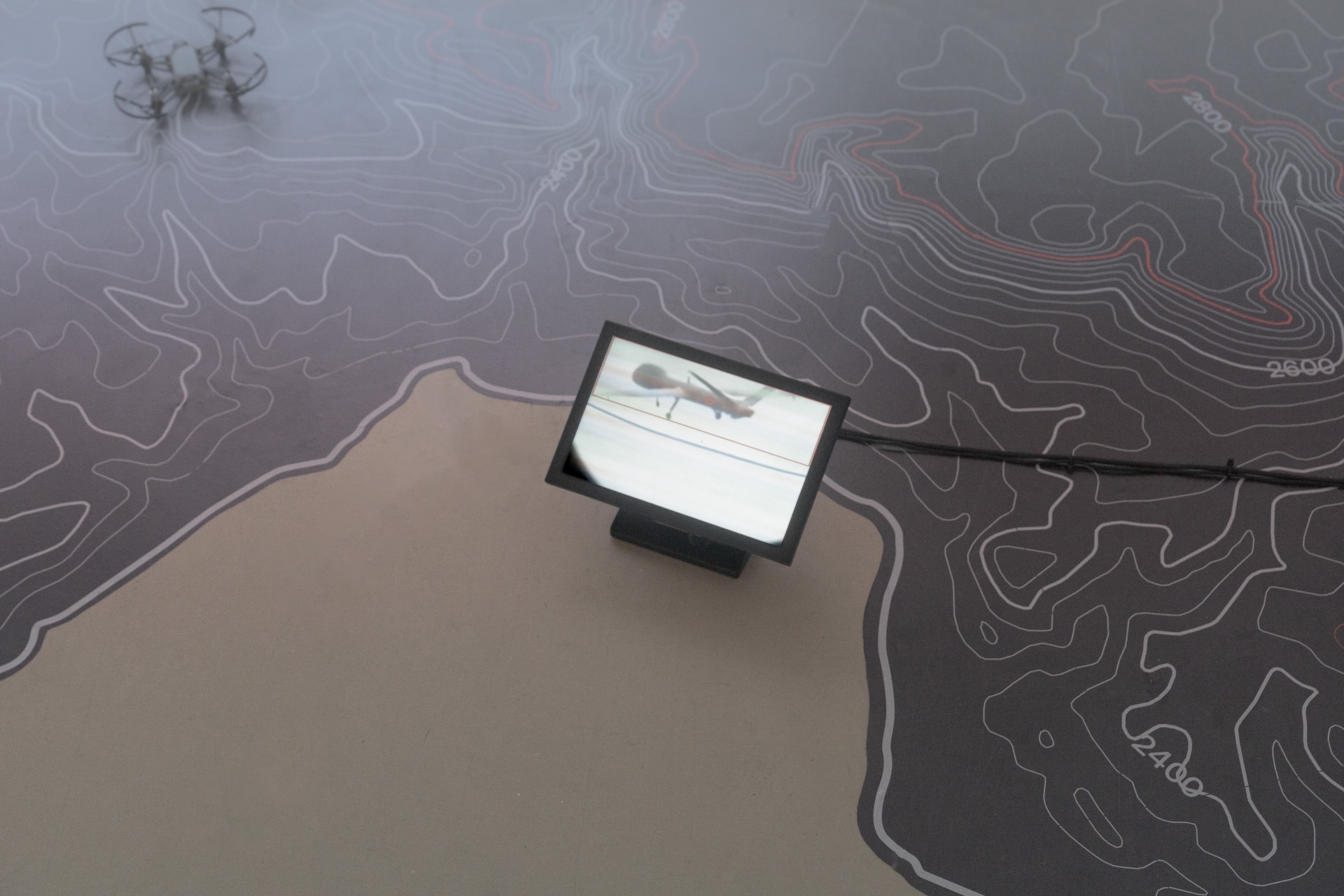

Video Installation with autonomous drone

2023

Description

The installation "Sibling Inference" questions the techniques of autonomous warfare and their influence on civilian technology. Does what has fatal consequences on a large scale repeat itself here on a smaller one?

Autonomous machines act on the basis of systems trained by humans and are therefore inherently biased. Because they act autonomously, they can cause uncontrollable damage.

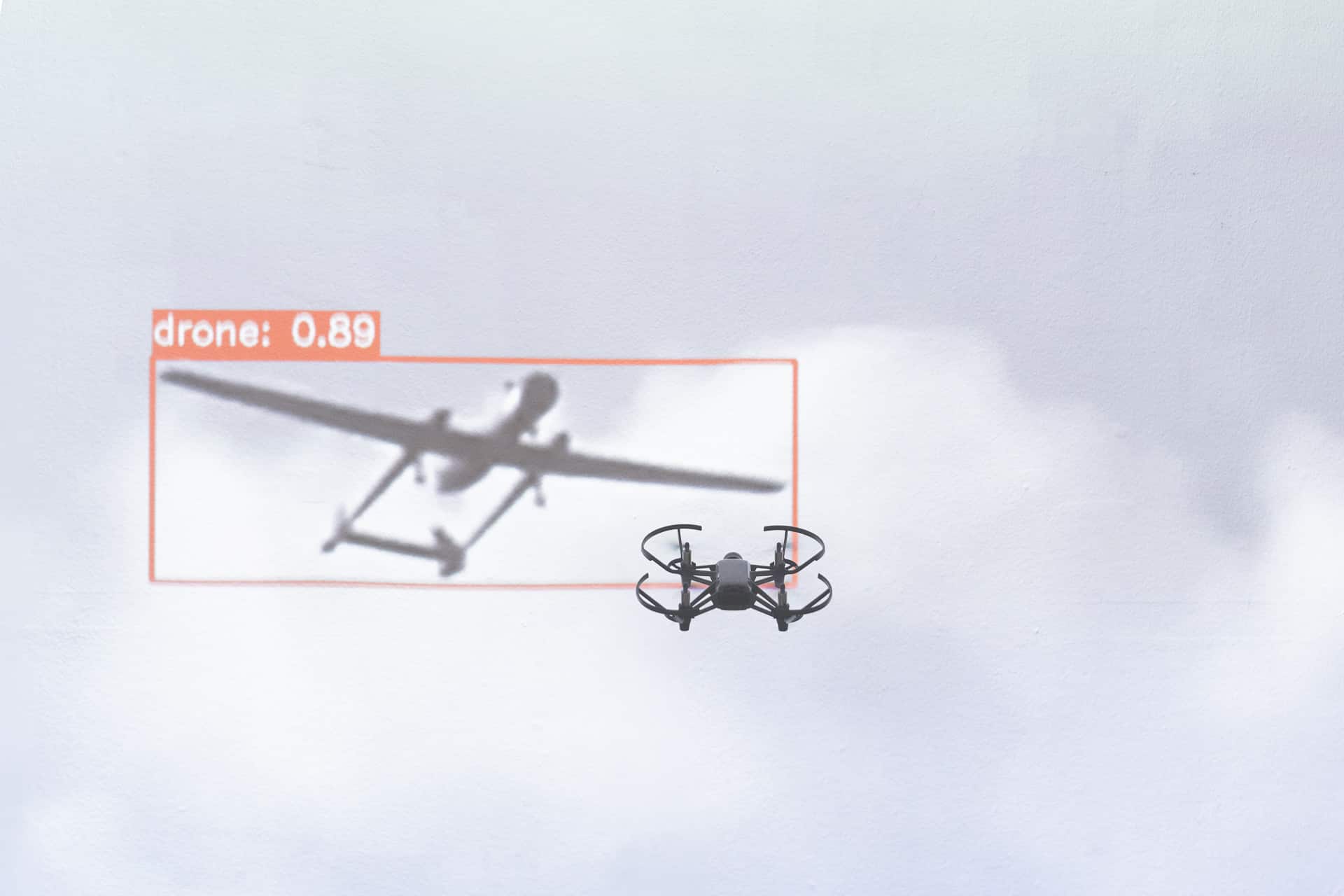

"Sibling Inference" addresses this problem by having a commercially available camera drone react to a military drone. Controlled by the same machine learning software, it tracks the image of its "martial sister" and is compelled to respond by positioning itself in relation to her.

Showings

Galerie Krinzinger – FINALS 2023, 23.06.2023

©Ferdinand Doblhammer

Inherent Resolve

Ever since artificial intelligence and machine vision made it possible to recognize and track individuals, the military has also employed these technologies. By combining various datasets (drone camera images, GPS data from mobile phones, movement logs, and others), individuals can be monitored and tracked without their knowledge. This creates a new, one-sided proximity to the targets, who cannot see the drones flying over their heads with the naked eye.

Authoritarian regimes use object recognition and classification software for surveillance. The choice of which actions and data to observe is a political decision, and it always relies on the acceptance or ignorance of those being monitored.

Since their development, automatic recognition techniques have been prone to bias and detection errors, and major disasters have often been averted only through human intervention. Denser datasets, more complex systems, and the automation that accompanies them reinforce the misconception that these technologies can facilitate and refine our decisions, or eventually make them altogether. The combination of flying, potentially armed surveillance cameras with autonomous decisions made by artificial intelligence trained on our likeness (which already causes atrocities enough) makes Orwellian scenarios seem possible in reality. Who is responsible when the worst case occurs? When does the machine become an accomplice?

We are entering a time in which the first military confrontation between autonomous machines could take place, with hardly any human supervision. At the same time, ordinary camera drones are being used for reconnaissance and various military operations for the first time. "Sibling Inference" raises questions about the undeniable connection between civilian and military technologies and encourages the viewer to engage in critical reflection.

More Projects ↓

Total View: Sensing Sinicization – Investigation of Chinese assimilation measures using street view images

warp-speed minnesang – Performance on network infrastructure

Decoding it the hard way – Multidisciplinary performance with a custom-built reactive installation

Inherent Resolve – Augmented reality experience of a life-size war drone

Roomtour AR – Augmented reality object generated from influencer videos

Ephemeral Borders – AR Instagram intervention

Tribute to Brandon Bryant – 3D sculpture & animated video

Email: ferdinand[at]doblhammer.media

Instagram: @moosiqunt

© Ferdinand Doblhammer 2024